Key takeaways

Bot attacks directly affect both the decision-making and the performance of a website. Malicious traffic is a direct threat to analytics: it can inflate conversion numbers and drastically distort key metrics, skewing your perception of how the site performs. Despite that, only 2.8% of websites are reported to be fully protected from bot threats. This guide covers the key points you should know about bot activity and how to reduce its influence on your business.

Bot traffic describes visits to your resource generated by automated software, also known as bots, rather than by real users. These can be search engine crawlers that index your pages, or malicious bots that generate fake clicks and imitate human traffic. Understanding this and being able to identify bots with harmful intentions, as well as automated attacks, is crucial for getting accurate performance data and maintaining a safe and secure website.

There are several ways in which malicious bot activity and automated threats can impact your website's performance and the quality of the analytics data you collect.

All these factors negatively impact the customer experience on your website and may lead to low ratings, poor-quality traffic, broken features, or even the resource becoming completely unavailable. You must be able to identify bot activity and stop bot attacks to preserve your brand reputation and keep providing quality services to your customers.

To counter bot threats and choose the best way to do so, it helps to know how to categorize malicious bot activity. There are 5 main types of so-called bad bots that can impact your company's performance.

Not all bots, however, flood websites with fake traffic or commit account takeovers. Certain types of bots are designed to assist users with different tasks, improve their experience, and help with online promotion.

While good bot activity often goes unnoticed in the background, content scraping, spamming, and credential theft are extremely dangerous. It is crucial to detect and block bad bots quickly and effectively to minimize their influence on your product.

To identify bot traffic on your website, you need to analyze user behavior and use advanced bot detection technologies.

| Indicator | What to look for |
| --- | --- |
| Unusual session duration | Bots often stay on a website for only a fraction of a second to achieve a specific goal, or have extremely long sessions as they try to imitate real user behavior. |
| Anomalously high bounce rate | A bot aimed at boosting your website's bounce rate can simply open a page and leave immediately, without any interaction. |
| Spikes in request numbers | A sudden rise in pageviews or a spiking number of requests from a single IP address may indicate a DDoS attack. |
| Fake conversions | Numerous forms filled with meaningless or fake data may indicate online fraud aimed at inflating conversion rates. |
| Unexpected traffic origins | Traffic from new or unexpected GEOs, as well as from IPs belonging to known data centers, often indicates spam and bot attacks. |
| Artificial click and scroll behavior | More expensive and sophisticated bots can already mimic human behavior, which is one of the biggest evolving threats in this domain. Other bots, however, can be detected by straight, repetitive mouse movements and unnatural scrolling patterns. |
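Some of these indicators can be turned into simple rules over your session logs. Below is a minimal Python sketch, assuming you already have per-session data (IP, duration, pageviews, interactions). The `Session` structure, the function name, and all thresholds are hypothetical and should be tuned on your own traffic. It covers only the duration, bounce, and spike signals, and approximates a request spike as an unusually high number of sessions from one IP.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Session:
    ip: str
    duration_s: float  # session length in seconds
    pageviews: int
    interactions: int  # clicks, scrolls, form inputs


# Hypothetical thresholds for illustration only.
MIN_DURATION_S = 1.0
MAX_DURATION_S = 4 * 60 * 60  # four hours
MAX_SESSIONS_PER_IP = 50


def flag_sessions(sessions: list[Session]) -> dict[int, list[str]]:
    """Map the index of each suspicious session to the indicators it triggers."""
    sessions_per_ip = Counter(s.ip for s in sessions)
    flags: dict[int, list[str]] = {}
    for i, s in enumerate(sessions):
        reasons = []
        # Extremely short or extremely long sessions.
        if not MIN_DURATION_S <= s.duration_s <= MAX_DURATION_S:
            reasons.append("unusual_duration")
        # Single pageview with no interaction at all.
        if s.pageviews == 1 and s.interactions == 0:
            reasons.append("instant_bounce")
        # Too many sessions from the same IP address.
        if sessions_per_ip[s.ip] > MAX_SESSIONS_PER_IP:
            reasons.append("request_spike")
        if reasons:
            flags[i] = reasons
    return flags


if __name__ == "__main__":
    sessions = [
        Session("203.0.113.5", 0.2, 1, 0),
        Session("198.51.100.7", 95.0, 4, 12),
    ]
    print(flag_sessions(sessions))  # {0: ['unusual_duration', 'instant_bounce']}
```

Treat such flags as signals for review rather than proof: a single indicator rarely identifies a bot on its own, and sophisticated bots are built to avoid exactly these patterns.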

Check and analyze your website traffic and user behavior regularly, especially once you notice unnatural patterns. In addition, you can take certain proactive measures to identify bot attacks.

| Method | How it works |
| --- | --- |
| Honeypots | Hidden fields are added to website forms to detect bot activity. They are invisible to human visitors, but bots often fill them in automatically. |
| IP blacklisting | Check the IPs of incoming requests against existing databases of known malicious IPs. |
| User-agent analysis | Bots often send spoofed user-agent strings that describe a device or browser that does not match the request's actual characteristics. |
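These proactive checks can be combined into one request filter. The Python sketch below is illustrative only: it assumes a hypothetical hidden form field named `website_url`, a placeholder blocklist built from reserved documentation IP ranges (a real one would come from a maintained threat-intelligence feed), and a `client_has_touch` flag that you would have to collect yourself, for example with a client-side script. The user-agent markers are default strings of a few common automation tools; sophisticated bots replace them, so this check only catches unsophisticated traffic.

```python
import ipaddress

# Placeholder blocklist using reserved documentation ranges (hypothetical).
BLOCKED_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("198.51.100.42/32"),
]

# Hypothetical name of a form field hidden from humans with CSS.
HONEYPOT_FIELD = "website_url"

# Substrings found in the default User-Agent of some automation tools.
AUTOMATION_MARKERS = ("headlesschrome", "python-requests", "curl/")


def inspect_request(ip: str, user_agent: str, form: dict[str, str],
                    client_has_touch: bool) -> list[str]:
    """Return the checks this request fails (an empty list means none fired)."""
    reasons = []

    # Honeypot: humans never see the field, so any value suggests a bot.
    if form.get(HONEYPOT_FIELD, "").strip():
        reasons.append("honeypot")

    # IP blocklist: compare the address with known-bad networks.
    # An IPv6 address is simply never "in" an IPv4 network, and vice versa.
    addr = ipaddress.ip_address(ip)
    if any(addr in net for net in BLOCKED_NETWORKS):
        reasons.append("blocked_ip")

    # User-agent analysis: obvious automation markers, or a UA that claims
    # a mobile device while the client reports no touch support.
    ua = user_agent.lower()
    if any(marker in ua for marker in AUTOMATION_MARKERS):
        reasons.append("automation_user_agent")
    elif ("iphone" in ua or "android" in ua) and not client_has_touch:
        reasons.append("user_agent_mismatch")

    return reasons


if __name__ == "__main__":
    print(inspect_request("203.0.113.9", "python-requests/2.31",
                          {"website_url": "x"}, False))
    # ['honeypot', 'blocked_ip', 'automation_user_agent']
```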

To boost your bot management and identification capabilities, you can combine behavioral analysis with machine learning. This way, you can build a model of typical human behavior and flag significant deviations from it as potential bot activity.
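As a simple stand-in for a trained ML model, the sketch below builds a statistical baseline (mean and standard deviation per feature) from sessions you know to be human, then flags any session that deviates by more than three standard deviations on a feature. This is a plain z-score check, not machine learning; the feature names and the cutoff are assumptions for illustration, and a production system would use richer features and an actual learned model.

```python
from statistics import mean, stdev

# Hypothetical behavioral features collected per session.
FEATURES = ("time_on_page", "scroll_events", "direction_changes")


def build_baseline(human_sessions: list[dict[str, float]]) -> dict[str, tuple[float, float]]:
    """Learn the mean and standard deviation of each feature from known-human sessions."""
    baseline = {}
    for f in FEATURES:
        values = [s[f] for s in human_sessions]
        baseline[f] = (mean(values), stdev(values))  # stdev needs at least two sessions
    return baseline


def deviations(session: dict[str, float],
               baseline: dict[str, tuple[float, float]],
               z_cutoff: float = 3.0) -> list[str]:
    """Return the features on which a session lies more than z_cutoff std devs from the mean."""
    flagged = []
    for f, (mu, sigma) in baseline.items():
        if sigma == 0:
            continue  # no variation in the baseline, so a z-score is undefined
        if abs(session[f] - mu) / sigma > z_cutoff:
            flagged.append(f)
    return flagged


if __name__ == "__main__":
    humans = [
        {"time_on_page": 40, "scroll_events": 12, "direction_changes": 30},
        {"time_on_page": 55, "scroll_events": 15, "direction_changes": 42},
        {"time_on_page": 35, "scroll_events": 9, "direction_changes": 25},
        {"time_on_page": 60, "scroll_events": 18, "direction_changes": 38},
    ]
    baseline = build_baseline(humans)
    bot = {"time_on_page": 2, "scroll_events": 0, "direction_changes": 0}
    print(deviations(bot, baseline))
    # ['time_on_page', 'scroll_events', 'direction_changes']
```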

Identifying malicious traffic is not enough. Knowing how to stop bot traffic and which bot mitigation practices to apply is crucial for making sure your project does not suffer from spam attacks, web scraping, or other bot activity. Here are the 10 best practices to stop bots from attacking your resources.

Of course, it is hard to follow all the best practices at the same time. We therefore recommend identifying the ones most relevant to your product (for instance, GEO filtering makes little sense for international products with clients worldwide) and keeping them active as consistently as possible.

Knowing the best practices to stop bot traffic is essential, but what about specific bot management solutions that can help you deal with malicious traffic? Here are some top tools you can try.

Cloudflare Bot Management (CBM) is an ML-based tool that uses several AI models to build a pattern of human behavior and then assigns every request a score from 1 to 99 depending on how closely it matches this pattern. The lower the score, the more likely it is that the request comes from a bot.

Depending on this score, you can block requests, challenge them, or require a CAPTCHA check. Cloudflare Bot Management also considers other factors, such as the IP address or ASN, which makes it more reliable and precise. In addition, the company highlights that its service only checks potentially malicious requests and does not block bots from Google or assistant chatbots, so your search engine rankings should not be affected.
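The score-based decision can be illustrated with a short Python sketch. It is a hypothetical model of the logic, not Cloudflare's API: in a real setup, you configure this behavior as rules in Cloudflare rather than in your own code, and the thresholds below (block under 10, challenge under 30) are arbitrary examples, not Cloudflare defaults. Verified bots, such as search engine crawlers, are let through regardless of their score.

```python
def choose_action(bot_score: int, verified_bot: bool,
                  block_below: int = 10, challenge_below: int = 30) -> str:
    """Map a CBM-style bot score (1-99, lower = more likely a bot) to an action.

    The threshold defaults are illustrative assumptions.
    """
    if not 1 <= bot_score <= 99:
        raise ValueError("bot score must be between 1 and 99")
    if verified_bot:
        return "allow"       # e.g. known search engine crawlers
    if bot_score < block_below:
        return "block"
    if bot_score < challenge_below:
        return "challenge"   # e.g. a managed challenge or CAPTCHA
    return "allow"


if __name__ == "__main__":
    for score in (5, 20, 80):
        print(score, choose_action(score, verified_bot=False))
    # 5 block
    # 20 challenge
    # 80 allow
```

Keeping the verified-bot check before the score check reflects the point above: a low score alone should not get a legitimate crawler blocked.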

CBM's downsides mainly come down to price and configuration complexity. Costs scale in proportion to your traffic, and for the best protection you may need to switch to higher-tier plans and activate add-ons. Misconfiguration may also lead to blocking legitimate crawlers, such as Google's.

Stape is not a bot mitigation platform. It is a server-side tracking services provider, and it has several power-ups aimed at protecting your analytics from spam traffic and fake conversions.

Stape's Bot Detection solution adds two headers to every incoming request. One contains "true" or "false", and the other contains a score from 1 to 100. Unlike with CBM, a higher score means a higher bot likelihood: 100 means the request was most likely generated by a bot. Likewise, "true" in the first header marks the request as a likely bot. If bot traffic is detected, this power-up stops the tags in your server GTM container from firing, helping you keep your analytics data clean.
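If you want to reproduce the same decision in your own server-side code, a minimal Python sketch could look like this. The header names `x-bot-detected` and `x-bot-score` and the threshold of 80 are hypothetical placeholders, not Stape's actual values; check Stape's documentation for the real header names. Note the scale is the opposite of CBM's: here, a higher score means a request is more likely to come from a bot.

```python
# Hypothetical header names; the real ones are defined by Stape.
BOT_FLAG_HEADER = "x-bot-detected"
BOT_SCORE_HEADER = "x-bot-score"


def should_fire_tags(headers: dict[str, str], score_threshold: int = 80) -> bool:
    """Return False when the request looks automated (higher score = more bot-like)."""
    # HTTP header names are case-insensitive, so normalize them first.
    normalized = {k.lower(): v.strip() for k, v in headers.items()}

    if normalized.get(BOT_FLAG_HEADER, "false").lower() == "true":
        return False

    try:
        score = int(normalized.get(BOT_SCORE_HEADER, "0"))
    except ValueError:
        score = 0  # treat a malformed score as "unknown" rather than "bot"
    return score < score_threshold


if __name__ == "__main__":
    print(should_fire_tags({"X-Bot-Detected": "false", "X-Bot-Score": "95"}))  # False
    print(should_fire_tags({"X-Bot-Detected": "false", "X-Bot-Score": "12"}))  # True
```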

The Block Request by IP power-up lets you block up to 30 IP addresses and exclude all their traffic from your analytics and GTM events. IP blocking is especially helpful if you already know the sources of potential bot threats and want to keep their traffic out.
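A fixed-size IP blocklist similar to the one this power-up provides can be sketched in Python as follows. The class name is hypothetical and this is not how Stape implements the feature; only the limit of 30 addresses comes from the description above. Parsing entries with the standard `ipaddress` module rejects malformed input and makes differently written forms of the same IPv6 address match.

```python
import ipaddress

MAX_BLOCKED_IPS = 30  # limit taken from the power-up description


class IpBlocklist:
    """Hypothetical fixed-size blocklist used to drop requests before tracking."""

    def __init__(self) -> None:
        self._ips = set()  # parsed IPv4Address / IPv6Address objects

    def add(self, ip: str) -> None:
        addr = ipaddress.ip_address(ip)  # raises ValueError on malformed input
        if addr not in self._ips and len(self._ips) >= MAX_BLOCKED_IPS:
            raise ValueError(f"blocklist is limited to {MAX_BLOCKED_IPS} addresses")
        self._ips.add(addr)

    def is_blocked(self, ip: str) -> bool:
        return ipaddress.ip_address(ip) in self._ips


if __name__ == "__main__":
    blocklist = IpBlocklist()
    blocklist.add("2001:db8::1")
    print(blocklist.is_blocked("2001:0db8:0:0::1"))  # True
    print(blocklist.is_blocked("203.0.113.6"))       # False
```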

A little off-topic, but still useful: Open Container for Bot Index. While it neither detects nor blocks bad bot traffic, this power-up optimizes your website for search engines and their crawlers, enhancing your data collection activity.

ModSecurity is an open-source web application firewall (WAF) engine. It is entirely free to use and can be added as a module to a web server or integrated through a connector. It is commonly paired with a ready-made rule set, such as the OWASP Core Rule Set, and also allows you to create custom rules.

ModSecurity checks every incoming request against its rules and triggers the configured action when a request matches a rule. Possible reactions to suspected bot requests include blocking, redirecting, or modifying the request.

The engine supports two modes: prevention, described above, and detection. In detection mode, it only logs suspicious incoming requests without taking any action against them.
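The difference between the two modes can be illustrated with a toy rule engine. In ModSecurity itself, the mode is set with the `SecRuleEngine` directive (`On` enforces rules, `DetectionOnly` only logs matches, `Off` disables the engine), and rules are written in ModSecurity's own rule language. The Python below only mimics that behavior; its rule IDs and patterns are hypothetical and are not taken from ModSecurity or the OWASP Core Rule Set.

```python
import logging
import re
from dataclasses import dataclass

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("waf-sketch")


@dataclass
class Rule:
    rule_id: int
    pattern: re.Pattern  # matched against the User-Agent header
    action: str          # "block" or "redirect"


# Hypothetical rules for illustration only.
RULES = [
    Rule(100001, re.compile(r"python-requests|curl/", re.I), "block"),
    Rule(100002, re.compile(r"scrapy", re.I), "redirect"),
]


def evaluate(user_agent: str, mode: str = "On") -> str:
    """Return the action to apply; mode mirrors SecRuleEngine (On / DetectionOnly / Off)."""
    if mode == "Off":
        return "pass"  # engine disabled: nothing is checked or logged
    for rule in RULES:
        if rule.pattern.search(user_agent):
            # Both active modes log the match...
            log.info("rule %d matched (action=%s, mode=%s)", rule.rule_id, rule.action, mode)
            # ...but only "On" actually enforces the action.
            return rule.action if mode == "On" else "pass"
    return "pass"


if __name__ == "__main__":
    print(evaluate("python-requests/2.31", "On"))             # block
    print(evaluate("python-requests/2.31", "DetectionOnly"))  # pass (match is only logged)
```

Running new rules in detection mode first, reviewing the logs, and only then switching to prevention is a common way to avoid blocking legitimate visitors by mistake.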

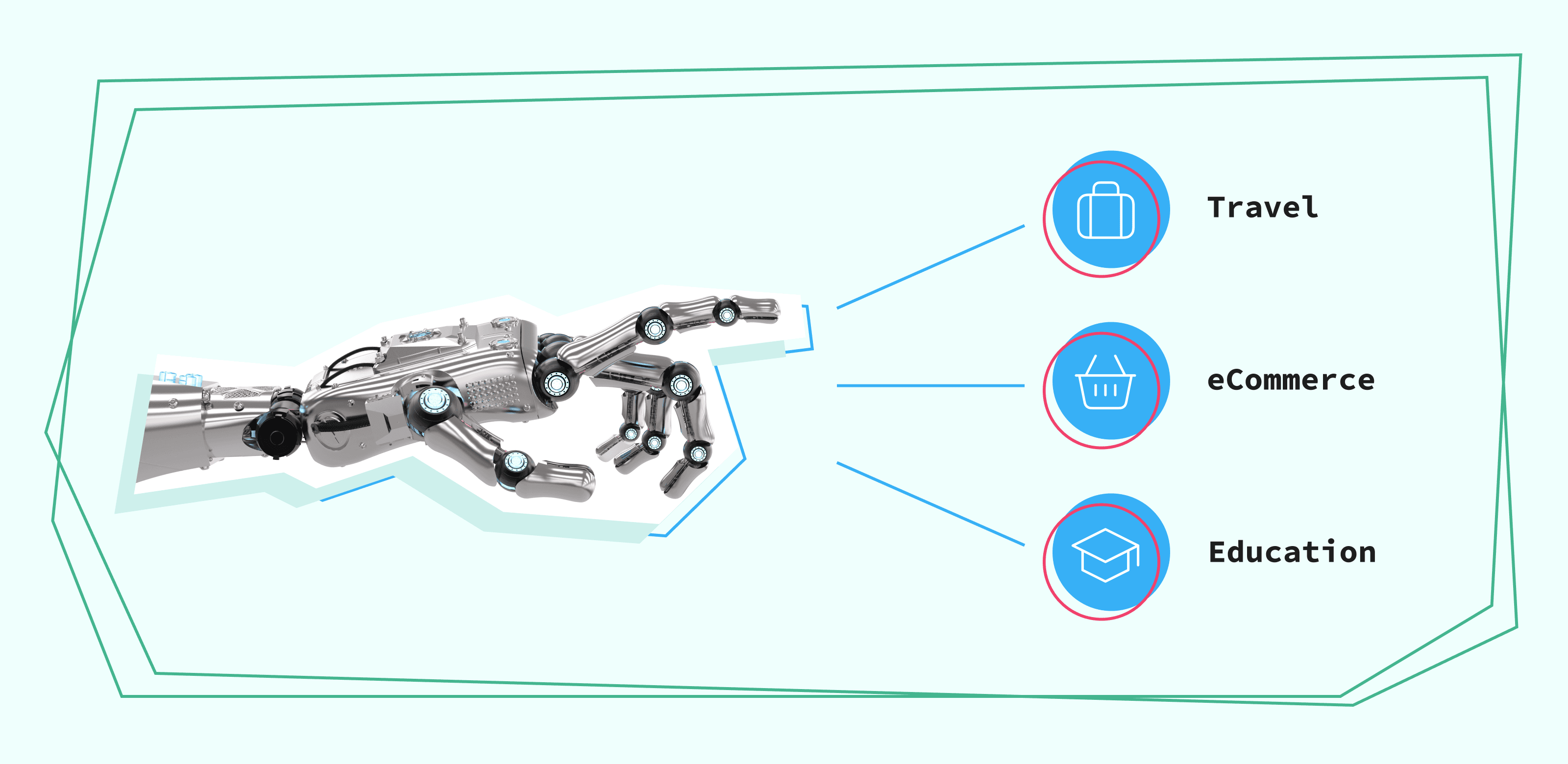

Although it may seem that the financial sector is most at risk of bot attacks due to the potential for payment fraud, statistics show that it is not even in the top 3, with only 8% of all attacks targeting financial services. The most targeted industries in 2025 are travel (27%), retail (15%), and education (11%).

The travel industry often suffers from bot attacks because it offers one of the easiest ways to gain unauthorized access to personal data. Booking and rental websites, airlines, and travel agencies often lack the level of data protection that banks and financial institutions have. Bots can scrape such resources for personal and sensitive information about their clients. Another common goal is posting fake reviews, artificially inflating demand, or damaging the reputation of legitimate businesses.

Retail and eCommerce suffer because of intense competition and because obtaining valuable data is relatively simple compared to the potential benefits. Competitors often use scraping bots to gather price and availability data and adjust their own offers accordingly. Other bots are used for inventory hoarding, creating artificial scarcity by automatically purchasing products until they are out of stock. Some bots may even try to access customer profiles and use their credentials and financial data for fraudulent purchases.

Malicious bots in the education industry hunt for students' and staff members' personal data, submit fake applications that make it harder for admissions offices to process real ones, and assist with intellectual property theft. The latter is the most dangerous aspect: scientific papers illegally republished elsewhere can lead to plagiarism issues, and research ideas and projects can be stolen and relaunched without credit to their authors.

When you establish a strong and effective bot blocking system, the first and foremost benefit is that the information stored on your resources stays safe. You no longer need to worry about your customer data being stolen or your analytics being overwhelmed with fake conversion events. This, in turn, starts a loop: quality data → better analytics → deeper understanding of your customers → more effective strategies → optimized ad spend → profits.

Another positive aspect is that bot blocking improves your website's performance. When it is not overloaded with fake requests, the site runs faster and has better load time metrics, improving the overall customer experience. Generally, businesses that filter bot traffic have a clear advantage over those that do not.
