Key takeaways

Marketing measurement is one of the most important topics for marketers in 2025-2026. Privacy rules, tracking limits, and noisy attribution make it hard to trust standard dashboards. To fix this, more brands use two approaches together: conversion lift studies and marketing mix modeling.

CLS gives you experimental proof for specific campaigns, MMM turns long-term data into always-on budget advice, and server-side tracking sits under both, keeping the numbers you use as complete and consistent as possible.

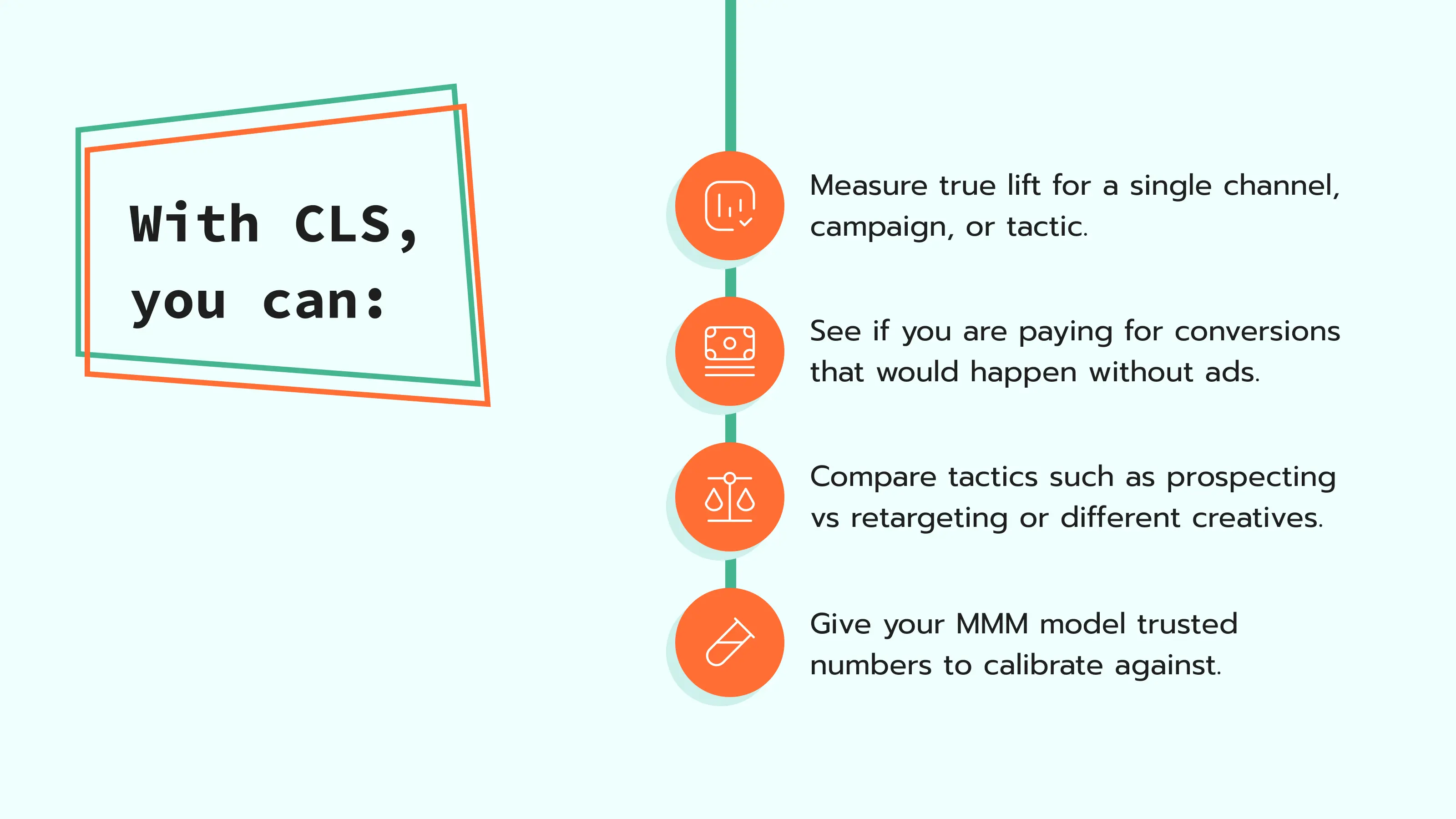

Conversion lift studies are randomized controlled experiments that measure how many extra conversions your ads create.

CLS splits your audience into two groups, a test group that can see your ads and a control group that cannot, and compares them.

The main question CLS helps you answer is: "Did this campaign cause conversions that would not have happened anyway?"

The idea of CLS comes from traditional scientific tests. In medicine, for example, one group of carefully chosen participants receives the real drug, and an equivalent group receives a placebo. After some time, scientists compare health results between the two groups to see the real effect of the drug.

In digital advertising, the "treatment" is ad exposure, and the "health result" is your business outcome, such as a sale, lead, or sign-up. Platforms like Meta, Google, and TikTok help create these groups, then report whether the test group converts more than the control group. The difference between the two groups is the incremental lift from your ads.
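As a minimal sketch of that comparison, the snippet below computes lift from test and control counts. The group sizes and conversion counts are made-up numbers, and `GroupResult` and `incremental_lift` are illustrative names, not part of any platform's API.

```python
from dataclasses import dataclass


@dataclass
class GroupResult:
    """Users and conversions observed in one experiment group (hypothetical)."""
    users: int
    conversions: int

    @property
    def rate(self) -> float:
        return self.conversions / self.users


def incremental_lift(test: GroupResult, control: GroupResult) -> dict:
    # Conversions the test group would have produced at the control group's rate.
    expected_without_ads = control.rate * test.users
    incremental = test.conversions - expected_without_ads
    relative_lift = (test.rate - control.rate) / control.rate
    return {
        "incremental_conversions": incremental,
        "relative_lift": relative_lift,
    }


# Made-up example: equal-sized groups, 2,300 vs 2,000 conversions.
result = incremental_lift(GroupResult(100_000, 2_300), GroupResult(100_000, 2_000))
print(f"Incremental conversions: {result['incremental_conversions']:.0f}")
print(f"Relative lift: {result['relative_lift']:.1%}")
```

With these inputs, the test group produces roughly 300 extra conversions, a lift of about 15% over the control group.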

CLS has become popular because it helps marketers and business owners get close to "ground truth" for specific campaigns. Instead of asking "how many conversions are attributed in the platform," it asks "how many extra conversions did this campaign actually create?"

The result is fewer guesses and less time spent arguing about attribution windows.

Marketing mix modeling, or MMM, is a statistical method that uses aggregated data to show how different channels and factors affect sales or revenue. Instead of tracking each user, MMM works with daily or weekly time series, such as:

An MMM separates the baseline (sales that happen anyway) from the part that comes from marketing activities. It then estimates ROI and marginal ROI per channel and helps answer questions such as:

Modern MMM tools also use results from experiments like CLS as inputs. This makes their estimates closer to reality and increases confidence when optimizing your media mix.
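To make the split between baseline and marketing-driven sales concrete, here is a deliberately tiny sketch: one channel, a geometric adstock (carry-over) transform, and ordinary least squares. Real MMM tools handle many channels, saturation, seasonality, and uncertainty. The weekly numbers, the 0.5 decay, and the function names are all assumptions made for illustration.

```python
def geometric_adstock(spend, decay):
    """Carry over a share of past spend into later weeks."""
    carried, out = 0.0, []
    for s in spend:
        carried = s + decay * carried
        out.append(carried)
    return out


def fit_line(x, y):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


# Synthetic weekly data: sales are built as 1,000 baseline + 2 per adstocked unit.
weekly_spend = [100, 0, 200, 150, 0, 300, 50, 0]
adstocked = geometric_adstock(weekly_spend, decay=0.5)
weekly_sales = [1000 + 2.0 * a for a in adstocked]

# The intercept plays the role of the baseline, the slope the marketing effect.
baseline, effect = fit_line(adstocked, weekly_sales)
marketing_sales = sum(effect * a for a in adstocked)
share = marketing_sales / sum(weekly_sales)
print(f"baseline per week: {baseline:.0f}, sales per adstocked unit: {effect:.2f}")
print(f"share of sales attributed to marketing: {share:.1%}")
```

Because the synthetic sales contain no noise, the fit recovers the baseline and effect they were built from. On real data the decay rate would itself have to be estimated, and the estimates would carry uncertainty.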

CLS and MMM both try to solve the same core problem: measure the incremental impact of your marketing. They just do it in different ways:

Used together, they form a loop.

Let's say you run lift tests on channels such as Meta, Google Search, or TikTok. You learn, for example, that Meta brings +15% incremental lift, Google Search +8%, and TikTok +22%, under the conditions of the test.

Then you feed these CLS results into MMM as priors or constraints. For example, when you use tools like Robyn by Meta, Meridian by Google, or PyMC-Marketing, you can ask the model to respect the lift ranges you observed in experiments.
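One simple way to picture this calibration is a precision-weighted average: the model's estimate and the experiment's estimate are combined, and the more certain one gets more weight. The sketch below is a conceptual illustration only. It does not use or reproduce the calibration API of Robyn, Meridian, or PyMC-Marketing, and the ROI values and standard deviations are hypothetical.

```python
import math


def combine_estimates(model_mean, model_sd, test_mean, test_sd):
    """Precision-weighted average of two normal estimates of the same quantity."""
    model_precision = 1.0 / model_sd ** 2
    test_precision = 1.0 / test_sd ** 2
    total = model_precision + test_precision
    mean = (model_mean * model_precision + test_mean * test_precision) / total
    return mean, math.sqrt(1.0 / total)


# Hypothetical numbers: the uncalibrated model says ROI 3.0 with wide uncertainty,
# the lift test says 2.0 with tighter uncertainty.
calibrated_mean, calibrated_sd = combine_estimates(3.0, 1.0, 2.0, 0.5)
print(f"calibrated ROI: {calibrated_mean:.2f} +/- {calibrated_sd:.2f}")
```

The calibrated estimate lands closer to the experiment (about 2.2) because the lift test is the more precise of the two inputs, and its uncertainty is smaller than either input alone.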

You then use the calibrated MMM to run scenarios, move budgets, and plan the following months. CLS tells you "this campaign worked at this moment," and MMM tells you "here is how to use that same channel inside your full mix over time."

In short, CLS gives you local truths, MMM gives you global structure, and together they help both performance and planning.

Client-side tracking relies on browser events and so depends on many things, such as:

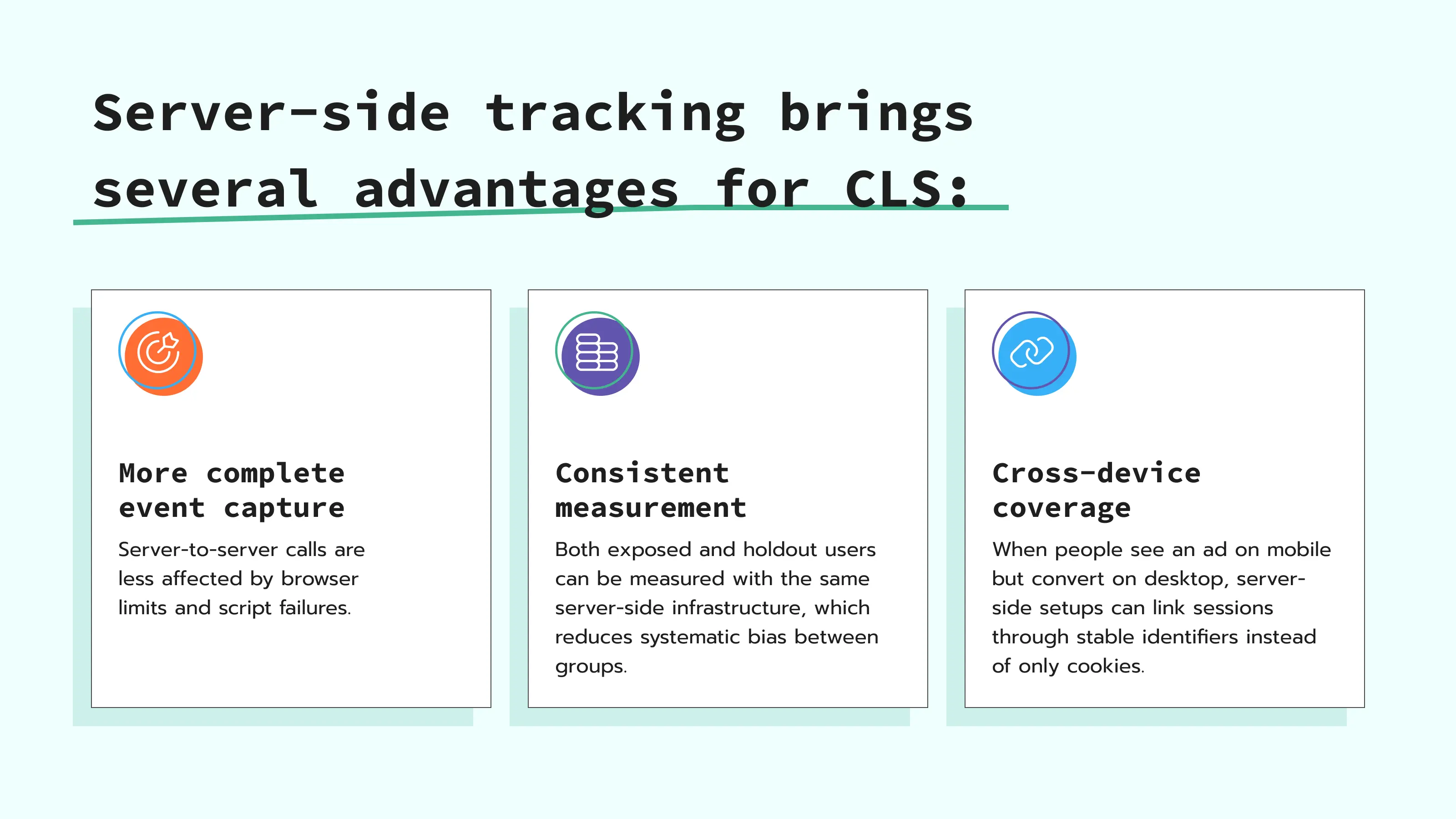

Industry estimates often state that browser-based setups can miss 10–30% of conversions in some cases. For CLS, this is a real problem because any bias in how you measure the test group vs the control group changes the lift estimate.
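The effect of missed conversions on a lift estimate can be sketched with a few lines of arithmetic. The true conversion counts and the capture rates below are assumptions chosen for illustration, not measurements.

```python
def relative_lift(test_conversions, control_conversions):
    """Relative lift for equal-sized test and control groups."""
    return (test_conversions - control_conversions) / control_conversions


TRUE_TEST, TRUE_CONTROL = 2_300, 2_000  # hypothetical true conversions

# Share of real conversions that tracking captures in (test, control).
scenarios = {
    "full tracking": (1.0, 1.0),
    "both groups lose 20%": (0.8, 0.8),
    "test loses 20%, control loses 10%": (0.8, 0.9),
}

measured = {}
for name, (test_capture, control_capture) in scenarios.items():
    seen_test = TRUE_TEST * test_capture
    seen_control = TRUE_CONTROL * control_capture
    measured[name] = {
        "lift": relative_lift(seen_test, seen_control),
        "incremental": seen_test - seen_control,
    }
    print(f"{name}: lift {measured[name]['lift']:.1%}, "
          f"incremental {measured[name]['incremental']:.0f}")
```

When both groups lose the same share of conversions, the relative lift is unchanged but the count of incremental conversions is understated. When the loss differs between groups, the lift itself is distorted.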

That is why many professionals now use server-side tracking to support the browser data. In a server-side setup, data still starts in the browser when the user visits your site or app. From there, key events are sent to a cloud server. The server then forwards the data to platforms through APIs such as Meta Conversions API or Google Ads API.
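As a rough sketch of the server's part in this flow, the snippet below builds a purchase event with a hashed email, ready to be forwarded. The field names follow the Meta Conversions API event format as commonly documented, but treat them as assumptions and check the current API reference before relying on them. The helper names, the order ID, and the URL are hypothetical, and the snippet only builds the payload; it does not send anything.

```python
import hashlib
import json
import time


def hash_email(email: str) -> str:
    """Normalize (trim, lowercase) and SHA-256 hash an email before sending."""
    return hashlib.sha256(email.strip().lower().encode("utf-8")).hexdigest()


def build_purchase_event(email, value, currency, event_id, page_url):
    # Field names are assumed from the Meta Conversions API docs; verify before use.
    return {
        "event_name": "Purchase",
        "event_time": int(time.time()),
        "event_id": event_id,  # shared with the browser event for deduplication
        "action_source": "website",
        "event_source_url": page_url,
        "user_data": {"em": [hash_email(email)]},
        "custom_data": {"value": value, "currency": currency},
    }


payload = {
    "data": [
        build_purchase_event(
            " Jane@Example.com ",
            49.90,
            "EUR",
            "order-1001",
            "https://shop.example/checkout/thanks",
        )
    ]
}
print(json.dumps(payload, indent=2))
```

In a real setup, the server would send this payload to the platform's endpoint with an access token, and a managed server-side container usually handles that step for you.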

Both CLS and MMM are estimation tools, and every estimate comes with some uncertainty. If uncertainty is too high, the numbers will not help you make better decisions. You may still change budgets, but you will not be sure if those changes really improve revenue or reduce risk.

Effective tracking lowers this uncertainty.
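To see what "uncertainty" means for a lift test, the sketch below computes an approximate 95% interval for the difference in conversion rates, using the standard normal approximation for two proportions. The counts are hypothetical, and the amount of observed data stands in here for how much the tracking setup manages to capture.

```python
import math


def lift_interval(test_users, test_conv, control_users, control_conv, z=1.96):
    """Approximate 95% interval for the difference in conversion rates."""
    p_test = test_conv / test_users
    p_control = control_conv / control_users
    se = math.sqrt(
        p_test * (1 - p_test) / test_users
        + p_control * (1 - p_control) / control_users
    )
    diff = p_test - p_control
    return diff - z * se, diff + z * se


# Same conversion rates (2.3% vs 2.0%), ten times more observed data in the second case.
small = lift_interval(10_000, 230, 10_000, 200)
large = lift_interval(100_000, 2_300, 100_000, 2_000)
print(f"less data: {small[0]:+.4f} to {small[1]:+.4f}")
print(f"more data: {large[0]:+.4f} to {large[1]:+.4f}")
```

With less data the interval includes zero, so the test cannot rule out "no lift at all". With more data the same rates give an interval that stays above zero.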

For CLS, more complete data means:

For MMM, more complete data means:

Server-side tracking plays a central role in both. It reduces missed conversions from browser limits and sends more complete data into both CLS and MMM.

When CLS and MMM are both in place, you get a strong feedback loop for decision-making.

Here is a simple view of the cycle:

1. Plan and run experiments. Pick questions that matter, for example, "Is this Meta prospecting campaign incremental?" or "Does this new TikTok format bring extra sales?" Run CLS with server-side tracking active, so test and control groups are tracked in the same way.

2. Update calibration. Take the new lift results and check how they compare with the current MMM outputs. Use them to adjust MMM priors or constraints, so the model respects what the corresponding experiments just proved.

3. Move budget to campaigns that sell. Use the calibrated MMM to run scenarios and move budgets across channels, campaigns, or regions. You now know that the model's view of each channel is in line with real-world lift test results, not just correlations.

4. Repeat. Every few months, repeat CLS on key areas, especially when you test new channels, creative strategies, or geos. Feed new results into MMM again.

Over time, this loop makes your measurement system stronger: CLS brings fresh experimental truth and MMM translates that truth into weekly or monthly budget decisions at scale.
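The budget step of the loop can be sketched as a small scenario comparison. The snippet assumes each channel follows a simple saturating response curve and that the curve parameters came out of a calibrated MMM. The curve shape, the parameter values, and the budgets are all hypothetical.

```python
def response(spend, max_revenue, half_saturation):
    """Saturating response curve: revenue grows with spend but flattens out."""
    return max_revenue * spend / (spend + half_saturation)


def marginal_return(spend, max_revenue, half_saturation):
    """Extra revenue per extra unit of spend at the current spend level."""
    return max_revenue * half_saturation / (spend + half_saturation) ** 2


# Hypothetical curve parameters that a calibrated MMM might output.
channels = {
    "meta": {"max_revenue": 500_000, "half_saturation": 100_000},
    "search": {"max_revenue": 300_000, "half_saturation": 50_000},
}


def total_revenue(allocation):
    return sum(response(allocation[name], **params) for name, params in channels.items())


current = {"meta": 50_000, "search": 50_000}
shifted = {"meta": 60_000, "search": 40_000}  # same total budget, different split

for name, params in channels.items():
    print(f"{name}: marginal return {marginal_return(current[name], **params):.2f}")
print(f"current plan: {total_revenue(current):,.0f}")
print(f"shifted plan: {total_revenue(shifted):,.0f}")
```

In this made-up case, the channel with the higher marginal return at current spend is the one that gains from the shift, and the total modeled revenue rises without increasing the budget.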

You do not need to change all your tracking to improve CLS. The goal is to add a server layer under your current web tracking and use it for the events that appear in your lift studies.

Here is a simple path with Stape:

After these steps, the events you use in CLS are logged in both browser and server flows, and test and control groups are measured in the same way. This reduces missed conversions, improves matching, and gives more complete data for both CLS and MMM.
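When the same event is logged in both flows, it should be counted once. Ad platforms that accept both browser and server events generally handle this on their side using a shared event ID. The sketch below only illustrates the idea on your own data, and the event structure and function name are assumptions.

```python
def merge_events(browser_events, server_events):
    """Keep one copy of each event, keyed by (event_name, event_id)."""
    merged = {}
    for source, events in (("browser", browser_events), ("server", server_events)):
        for event in events:
            key = (event["event_name"], event["event_id"])
            # First copy wins; later copies with the same key are duplicates.
            merged.setdefault(key, {**event, "source": source})
    return list(merged.values())


browser = [{"event_name": "Purchase", "event_id": "order-1001", "value": 49.9}]
server = [
    {"event_name": "Purchase", "event_id": "order-1001", "value": 49.9},
    # Hypothetical purchase that never fired in the browser.
    {"event_name": "Purchase", "event_id": "order-1002", "value": 20.0},
]

unique = merge_events(browser, server)
print(len(unique), [e["source"] for e in unique])
```

Here the purchase seen in both flows is kept once, and the purchase the browser missed is recovered from the server flow.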

If you want a full tutorial, you can follow the step-by-step guide on how to set up server-side tracking with Stape, which explains every technical step in detail.

| This article was written in collaboration with Gabriele Franco, CEO at Cassandra. |
